Kathmandu, Nepal | February 24, 2025

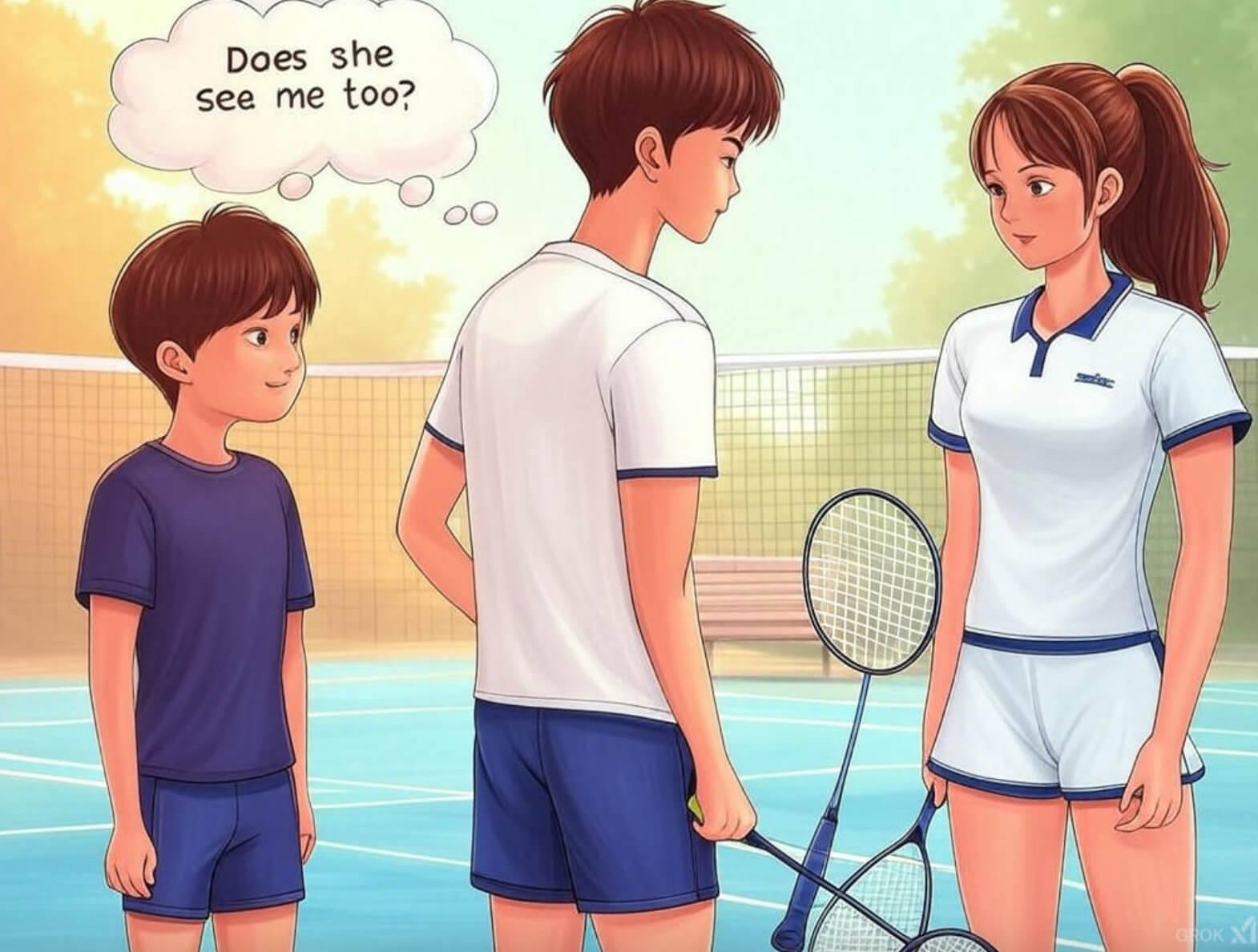

Back in high school, I earned the nickname “King of Unspoken Glances”—a title I wore with a shy grin. I had crushes on nearly every girl I met, from Lovedeep & Pooja, who smashed shuttlecocks on the badminton court, to Ritika, the untouchable star who aced every test. My mind spun stories—imagined glances returned, whispers of “maybe she likes me too.” Most of it? Pure hallucination. I’d see a smile where there was none, hear flirtation in a simple “hi.” But those hallucinations fueled my teenage dreams, even if they weren’t real.

Fast forward—I’m now a father in Kathmandu, chasing a new dream: using Grok 3, xAI’s “scary smart” AI trained on a 200,000 Nvidia GPU cluster in Memphis, to build disaster preparedness for Nepal’s quakes and floods. And here’s the twist—Grok sometimes hallucinates too, spitting out wild facts like “Kathmandu flooded yesterday!” when I ask about monsoon risks. It got me thinking: what’s the deal with AI hallucinations versus human ones? And can we harness this concept of hallucination to make LLMs like Grok work better—not just for tech geeks, but for folks like me trying to save lives in the Himalayas?

What’s a Hallucination, Anyway?

Human hallucinations are the brain’s wild creations: seeing, hearing, or believing things that aren’t there. Sometimes it’s a trick of the mind, like my high school self “seeing” Ritika blush when she didn’t. Sometimes it’s something deeper, like schizophrenia’s vivid voices, which by commonly cited estimates around 70% of patients experience as real. It’s messy, tied to dopamine, emotions, memories. Ever misremembered a crush’s words so hard you swore they happened? That’s the everyday version of human hallucination: our memories shift over time, especially emotional ones (25-50% of them, by the figure I’ve seen quoted).

AI hallucinations? Cleaner, but still wild. They’re when models like Grok generate stuff that’s wrong but sounds legit—like saying “Lovedeep won Wimbledon” because it mashed up badminton stats with tennis dreams. It’s not deceit; it’s math—statistical patterns in training data (think a trillion words!) misfiring. Grok 3, launched February 17, 2025, might claim “Nepal’s monsoon killed 10,000 last year” when the real number’s 200. Why? It’s stitching threads—flood data, death stats, Nepal news—into a confident but off-base quilt.

Human vs. AI: A Tale of Two Hallucinations

My chat history with Grok sparked this: I asked whether humans hallucinate more than AI, and the answer flipped my perspective. Humans do it constantly, through pareidolia (faces in clouds), confirmation bias (my crush meant something), or just daydreams. My unspoken glances? Half were illusions I built. Ritika smiling across the classroom wasn’t always real, but it felt true. Science backs it: stress, emotions, even sleep loss (reportedly 10-20% of people hallucinate after 48 hours awake) twist our reality. It’s biological: our brains fill gaps, often beautifully, sometimes painfully.

AI, though? Grok’s hallucinations are colder: data glitches, not dreams. If I ask, “Predict Kathmandu’s next quake,” it might say “Tomorrow, 6.5!” That’s not because it feels anything, but because seismic patterns and a stray X post got overblown. Unlike humans, AI doesn’t live its errors, and they’re fixable with better data or guardrails. Human hallucinations are baked in; Grok’s are patchable, though all of xAI’s compute doesn’t guarantee perfection.
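To make “guardrails” a little more concrete, here’s a minimal sketch of one possible check: pull the first number out of a model’s answer and compare it with a trusted reference before anyone acts on it. Everything here is an assumption for illustration. `TRUSTED_FACTS`, `check_claim`, and the 25% tolerance are hypothetical names and values, not part of Grok or any xAI API, and the 200 figure is simply the one quoted earlier in this post.

```python
import re
from typing import Optional

# Hypothetical reference table; in practice this would come from a vetted source.
TRUSTED_FACTS = {"nepal_monsoon_deaths_last_year": 200}


def extract_first_number(text: str) -> Optional[int]:
    """Return the first integer found in the text, ignoring thousands separators."""
    match = re.search(r"\d[\d,]*", text)
    if match is None:
        return None
    return int(match.group().replace(",", ""))


def check_claim(answer: str, fact_key: str, tolerance: float = 0.25) -> str:
    """Classify an answer as 'ok', 'flagged', or 'unverified' against a trusted value."""
    claimed = extract_first_number(answer)
    trusted = TRUSTED_FACTS.get(fact_key)
    if claimed is None or trusted is None:
        return "unverified"  # nothing to compare, so do not vouch for it
    # Guard against division by zero when the trusted value is 0.
    relative_error = abs(claimed - trusted) / max(abs(trusted), 1)
    return "ok" if relative_error <= tolerance else "flagged"


if __name__ == "__main__":
    print(check_claim("Nepal's monsoon killed 10,000 last year",
                      "nepal_monsoon_deaths_last_year"))
```

A check this crude only catches numeric claims it has a reference for, which is the point: anything it can’t verify is marked “unverified” rather than waved through.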

Hallucination as a Tool: Nepal’s Quake Pilot

Here’s where it gets wild. I’m working with a buddy on a Grok 3 pilot to tackle Nepal’s disaster bottlenecks: weak early warning systems (EWS), poor coordination, low public awareness. Quakes like 2015’s magnitude 7.8 (about 9,000 dead) hit hard ’cause alerts lagged. So we’re scripting Grok to detect a fake 6.2 quake, text 10 crewmates “Evac!” within 8 seconds, coordinate aid tasks, and run SMS drills (“Fake quake, practice!”).
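The drill flow described above can be sketched roughly like this. It’s a toy under stated assumptions, not the pilot’s actual code: `QuakeEvent`, `dispatch_alert`, and the 6.0 alert threshold are hypothetical, and the `send` argument stands in for whatever SMS gateway gets used (here it’s just a function that appends to a list). The 8-second budget and the message texts come from the plan in this post.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List

ALERT_THRESHOLD = 6.0    # assumed magnitude cutoff, not an official value
DEADLINE_SECONDS = 8.0   # target from the pilot plan: all texts out within 8 seconds


@dataclass
class QuakeEvent:
    magnitude: float
    is_drill: bool


def build_message(event: QuakeEvent) -> str:
    """Drills get a clearly marked practice message; real events get the evac text."""
    return "Fake quake, practice!" if event.is_drill else "Evac!"


def dispatch_alert(event: QuakeEvent, crew: List[str],
                   send: Callable[[str, str], None]) -> Dict[str, object]:
    """Send one message per crew member and report whether the deadline was met."""
    if event.magnitude < ALERT_THRESHOLD:
        return {"sent": 0, "elapsed": 0.0, "on_time": True}
    start = time.monotonic()
    message = build_message(event)
    for number in crew:
        send(number, message)  # stand-in for a real SMS gateway call
    elapsed = time.monotonic() - start
    return {"sent": len(crew), "elapsed": elapsed,
            "on_time": elapsed <= DEADLINE_SECONDS}


if __name__ == "__main__":
    outbox = []
    crew = [f"crew-{i}" for i in range(1, 11)]  # 10 placeholder recipients
    report = dispatch_alert(QuakeEvent(6.2, is_drill=True), crew,
                            lambda number, text: outbox.append((number, text)))
    print(report["sent"], report["on_time"])
```

Passing `send` in as a parameter keeps the timing logic testable without touching a phone network, and swapping in a real gateway later means changing one function.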

But what if we flipped hallucinations into a feature? Human hallucinations—like my daydreams—spark creativity. What if Grok “hallucinated” disaster scenarios for us? Not errors, but simulations—“If 6.5 hits Kathmandu tomorrow, 20% buildings fall.” It could pre-plan evac routes, task aid teams, SMS drills—all before the real quake. Instead of fearing AI hallucinations, we’d harness them—controlled, useful imagination.
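One way to keep that kind of imagination “controlled” is to make every what-if carry its own label, so nobody can mistake it for a report. The sketch below is only an illustration: `Scenario` is a hypothetical class, the 20% collapse fraction is the example figure from the paragraph above, and the building count of 1,000 is a round placeholder, not real Kathmandu data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    city: str
    magnitude: float
    collapse_fraction: float  # share of buildings assumed to fall (illustrative)
    building_count: int       # placeholder total, not a census figure

    @property
    def buildings_lost(self) -> int:
        """Number of buildings lost under this scenario's assumptions."""
        return round(self.collapse_fraction * self.building_count)

    def describe(self) -> str:
        """Render the scenario with an explicit simulation label up front."""
        return (f"SIMULATION ONLY: if a {self.magnitude} quake hits {self.city}, "
                f"about {self.buildings_lost} of {self.building_count} buildings fall.")


if __name__ == "__main__":
    scenario = Scenario("Kathmandu", 6.5, 0.20, 1000)
    print(scenario.describe())
```

The label lives inside `describe`, so any SMS drill or planning note built from a scenario inherits it automatically.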

Making LLMs Better with Hallucination

Here’s my pitch—LLMs like Grok can learn from the concept of hallucination to work better, especially for real-world stuff like Nepal’s disasters:

- Simulated Scenarios: Train Grok to “hallucinate” crisis what-ifs, like “Flood hits Pokhara, 50 mm rain!”, based on USGS or Weather Underground data. It’d model outcomes (e.g., “1,000 displaced”), prep SMS alerts, and sync aid teams, all proactively.

- Adaptive Filters: Human hallucinations shift with context. My Ritika blush faded when I saw her focus wasn’t me. Grok could mimic that and filter outputs by user need. Disaster prep? Stick to data. Storytelling? Hallucinate a bit (“Lovedeep serves an ace!”) for fun.

- Feedback Loops: We humans learn. My high school dreams adjusted when reality hit. Grok can too: log “wrong” hallucinations (e.g., “10,000 monsoon deaths”), let users flag ’em, retrain on truth. X posts help, since real-time Nepal flood chatter could ground Grok’s guesses.
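The last two ideas, adaptive filters and feedback loops, can be sketched together in a few lines. Treat this as a thought experiment in code rather than how Grok works: `MODES`, `settings_for`, and `FeedbackLog` are hypothetical names, and the temperature values are assumptions (temperature is a common sampling knob in LLMs, where lower usually means less inventive output).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Assumed per-task settings: strict for disaster prep, loose for storytelling.
MODES: Dict[str, Dict[str, object]] = {
    "disaster_prep": {"temperature": 0.0, "require_source": True},
    "storytelling": {"temperature": 0.9, "require_source": False},
}


def settings_for(task: str) -> Dict[str, object]:
    """Pick settings by task, falling back to the strict mode when unsure."""
    return MODES.get(task, MODES["disaster_prep"])


@dataclass
class FeedbackLog:
    flagged: List[Dict[str, str]] = field(default_factory=list)

    def flag(self, claim: str, correction: str) -> None:
        """Record a wrong claim alongside the correction a user supplied."""
        self.flagged.append({"claim": claim, "correction": correction})

    def training_pairs(self) -> List[Tuple[str, str]]:
        """Return (wrong, corrected) pairs that a later retraining step could use."""
        return [(item["claim"], item["correction"]) for item in self.flagged]


if __name__ == "__main__":
    log = FeedbackLog()
    log.flag("10,000 monsoon deaths", "about 200 monsoon deaths")
    print(settings_for("disaster_prep"), log.training_pairs())
```

Defaulting unknown tasks to the strict mode is a deliberate choice here: when the context is unclear, sticking to data is the safer mistake.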

Love, Quakes, and Hallucination’s Edge

Funny thing—hallucination ties my worlds together. That love letter I WhatsApp’d Ritika? (Still waiting, 3-4 days to go!) It’s my heart, shaped by Grok’s NLP—a “controlled hallucination” of words I felt but couldn’t pen. If she replies, maybe my high school glances weren’t all illusion. And this quake pilot? Grok’s “hallucinations” could save lives—imagining disasters to dodge them.

AI and human hallucinations aren’t so different: both are glitches of imagination. But where humans live theirs, AI can learn from them. For now, I’m Lil in Kathmandu, glancing at my phone, hoping Ritika writes back, and scripting Grok to keep my city safe.